SQLFlow-Submitter¶

With SQLFlow-Submitter, you will be able to submit SQL and metadata to the SQLFlow server, to create SQLFlow Jobs as well as to generate data lineage.

Prerequisites¶

-

SQLFlow-Ingester package

Download our latest SQFlow-Ingester package here. * Java 8 must be installed and configured correctly.

Installation¶

1 2 3 | |

- Under Linux & Mac to add permissions

1 | |

Usage¶

(1) (1) (1) (1) (1) (1) (1) (1).png)

After decompressing the package, you will find submitter.bat for Windows and submitter.sh for Linux & Mac.

Windows ¶

1 | |

(1) (1) (1) (1).png)

Linux & Mac¶

1 2 3 4 5 6 7 | |

.png)

After successfully executing the Submitter, check the Job list on UI and you will find the job submitted by the Submitter, with the Job name configured in the configuration file.

Cron Job¶

If you are on Linux or Mac, you can schedule the submitter with crontab to create a cron job.

1 | |

In the editor opened by the above command, let's say now we want to schedule a daily cron job, add the following code

1 2 3 4 5 | |

Please check this document for more information about cron and you can learn the cron grammar with Wikipedia.

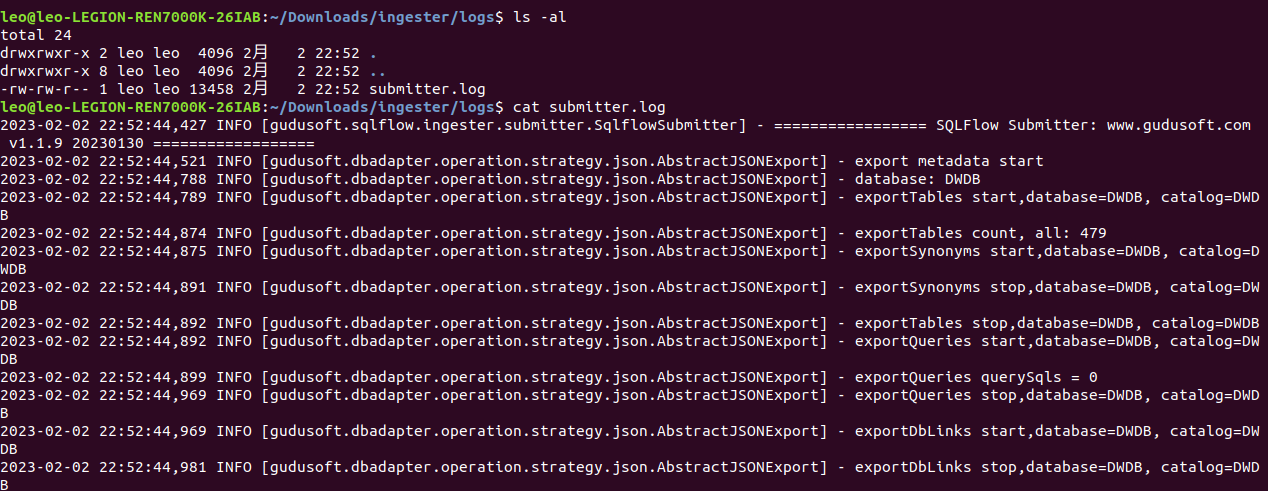

SQLFlow-Submitter Log¶

The logs of the submitter are persisted under log folder.

Common Logs¶

- file is not a valid file.

The file does not exist or the file address cannot be found.

- sqlScriptSource is valid, support source are database,gitserver,singleFile,directory

The sqlScriptSource parameter is incorrectly set. Data sources are only supported from databases, remote repositories, and files and directories

- lineageReturnFormat is valid, support types are json,csv,graphml

Parameter lineageReturnFormat is incorrectly set. The data lineage result obtained can only be in JSON, CSV, and GraphML formats

- export metadata in json successful. the resulting metadata is as follows

Exporting metadata from the specified database succeeded.

- This database is not currently supported

Parameter databaseType set error, at present only support access, bigquery, couchbase, dax, db2, greenplum, hana, hive, impala, informix, mdx, mssql,sqlserver,mysql,netezza,odbc,openedge,oracle,postgresql,postgres,redshift,snowflake,sybase,teradata,soql,vertica,azure

- db connect failed

The metadata fails to be exported from the specified database. If the metadata fails to be exported, check whether the database connection information in the dataServer object is correct

- export metadata in json failed

Failed to export metadata from the specified database. Check whether the user who logs in to the database has the permission to obtain metadata

- metadata is empty

Exporting metadata from specified database is empty, please contact me for processing

- remote warehouse url cannot be empty

The URL in the gitServer parameter cannot be empty

- remote warehouse pull failed

Failed to connect to the remote warehouse. Check whether the remote warehouse connection information is correct

- connection failed,repourl is the ssh URL

The remote repository address is incorrect. Please check whether it is a Git address

- remote warehouse file to zip successful. path is:xx

Pull to a local storage address from a remote repository

- get token from sqlflow failed

Failed to connect to SQLFlow. Check whether connection parameters of sqlflowServer are correct

- submit job to sqlflow failed, please input https with url

Failed to submit the SQLFlow task. Check whether the URL and port of the sqlflowServer are correct

- submit job to sqlflow failed

Failed to submit the SQLFLOW task. Check whether the sqlFLOW background service is started properly

- get job to status failed

After a job is submitted to SQLFLOW, SQLFlow fails to execute the job

- export json result failed

Description Failed to export Data Lineage in JSON format from SQLflow

- export csv result failed

Description Failed to export Data Lineage in csv format from SQLflow

- export diagram result failed

Description Failed to export Data Lineage in diagram format from SQLflow

- submit job to sqlflow successful

The job is successfully submitted to SQLFlow, and the basic information about the submitted job is displayed

- [database: 0 table: 0 view: 0 procedure: 0 column: 0 synonym: 0]

Statistics the amount of metadata exported from the specified database

- the time taken to export : 0ms

Time, in milliseconds, to export metadata from the specified database

- download success, path: xxx

Local storage address of Data Lineage returned after successfully submitting a job to SQLFlow

- job id is : xxxx

job id from sqlflow , log in to the SQLFlow website to view the newly analyzed results. In the Job List, you can view the analysis results of the currently submitted tasks.

- The number of relationships in this task is too large to export this file, please check data lineage on SQLFlow platform.

When the task uploaded to SQLFlow is too large or the number of rolls parsed by SQLFlow is too large, SQLFlow-Submitter cannot obtain CSV files from it.

Configuration File¶

Configuration sample¶

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 | |

Snowflake query history¶

For the query history of Snowflake, we will need the following settings:

1 2 3 4 5 | |

For more details, check configuration table.

Configuration Fields¶

| Field Name | Description | Sample Value |

|---|---|---|

| $databaseServer | Contains the DB information and DB related settings | N/A, Object |

| $databaseServer.hostname | DB server hostname, can be the IP address or the domain name if using SQLFlow On-Premise | DESKTOP-MLUR76N\SQLEXPRESS or 115.159.115.32 |

| $databaseServer.port | DB server port number | 1433 |

| $databaseServer.database | The name of the database instance to which it is connected | For azure,greenplum,netezza,oracle,postgresql,redshift,teradata databases, it represents the database name and is required, For other databases, it is optional. |

| $databaseServer.extractedDbsSchemas | List of databases and schemas to extract, separated by commas, which are to be provided in the format database/schema; Or blank to extract all databases. database1/schema1,database2/schema2,database3 or database1.schema1,database2.schema2,database3 When parameter database is filled in, this parameter is considered a schema. And support wildcard characters such as database1/*,*/schema,*/* | When the connected databases are Oracle and Teradata, this parameter is schemas, for example: extractedDbsSchemas: "HR,SH" When the connected databases are Mysql , Sqlserver, Postgresql, Snowflake, Greenplum, Redshift, Netezza , Azure, this parameter database/schema, for example: extractedDbsSchemas: "MY/ADMIN" |

| $databaseServer.excludedDbsSchemas | This parameters works under the resultset filtered by extractedDbsSchemas. List of databases and schemas to exclude from extraction, separated by commas database1/schema1,database2 or database1.schema1,database2 When parameter database is filled in, this parameter is considered a schema. And support wildcard characters such as database1/*,*/schema,*/* | When the connected databases are Oracle and Teradata, this parameter is set the schemas, for example: excludedDbsSchemas: "HR" When the connected databases are Mysql , Sqlserver, Postgresql, Snowflake, Greenplum, Redshift, Netezza , Azure, this parameter is set database/schema, for example: excludedDbsSchemas: "MY/*" |

| $databaseServer.extractedStoredProcedures | A list of stored procedures under the specified database and schema to extract, separated by commas, which are to be provided in the format database.schema.procedureName or schema.procedureName; Or blank to extract all databases, support expression. database1.schema1.procedureName1,database2.schema2.procedureName2,database3.schema3,database4 or database1/schema1/procedureName1,database2/schema2 | extractedStoredProcedures: "database.scott.vEmp*" or extractedStoredProcedures: "database.scott" |

| $databaseServer.extractedViews | A list of stored views under the specified database and schema to extract, separated by commas, which are to be provided in the format database.schema.viewName or schema.viewName. Or blank to extract all databases, support expression. database1.schema1.procedureName1,database2.schema2.procedureName2,database3.schema3,database4 or database1/schema1/procedureName1,database2/schema2 | extractedViews: "database.scott.vEmp*" or extractedViews: "database.scott" |

| $databaseServer.enableQueryHistory | Fetch SQL queries from the query history if set to true default is false. | true/false |

| $databaseServer.queryHistoryBlockOfTimeInMinutes | When enableQueryHistory:true, the interval at which the SQL query was extracted in the query History,default is 30 minutes. | 15 |

| $databaseServer.sqlsourceTableName | Check here | query_table |

| $databaseServer.sqlsourceColumnQuerySource | Check here | query_source |

| $databaseServer.sqlsourceColumnQueryName | Check here | query_name |

| $databaseServer.metaStore | The metadata that is fetched from a Hive or SparkSQL metaStore. The metaStore uses the RDBMS such as MySQL to save the metadata. Only Hive metaStore is supported in current version. when this option is set to | Hive |

| $databaseServer.authentication | Authentication method to connect to the Database server, only windows user is required to give the fixed value windowsuser . | windowsuser |

| $sqlFlowServer | Contains the SQLFlow server information | N/A, Object |

| $sqlFlowServer.server | SQLFlow server address | https://api.gudusoft.com |

| $sqlFlowServer.serverPort | SQLFlow server port | 443 |

| $sqlFlowServer.userId | Account userId | gudu|0123456789 |

| $sqlFlowServer.userSecret | Account user secret | eyJhbGciOiJIUzIxxxxxxx |

| sqlScriptSource | You may collect SQL scripts from various sources such as database, Github repo, file system. This parameter tells SQLFlow-Submitter where the SQL scripts come from. Only database is supported in current version | database |

| lineageReturnFormat | SQLFlow-Submitter will fetch the data lineage back to the directory where the SQLFlow-Submitter is running. Those data lineage results are stored in the This parameter specifies which kind of format is used to save the data lineage result. Available values for this parameter:

| json |

| lineageReturnOutputFile | path to output the data lineage | "lineageReturnOutputFile":"/user/data.csv" |

| databaseType | the SQL type of the scripts | mysql |

| taskName | Job name | |

| jobNameToId | 0 or 1, if set to 1, job can be submitted for multiple times | 1 |

| jobType | job type, Simple job or Regular job Check here for more info | simple |

| donotConnectToSQLFlowServer | If donotConnectToSQLFlowServer is set to 1, the metadata file is not uploaded to SQLFlow. the default is 0 | 0 |

Process SQL queries in a database table¶

This feature will extract SQL queries saved in a database table, metadata of the same database will also be extracted into the same JSON file.

sqlsourceTableName¶

Name of the table where SQL queries are saved.

table name: query_table

| query_name | query_source |

|---|---|

| query1 | create view v1 as select f1 from t1 |

| query2 | create view v2 as select f2 from t2 |

| query3 | create view v3 as select f3 from t3 |

If you save SQL queries in a specific table, one SQL query per row.

Let's say: The column query_table.query_source stores the source code of the query. We can use this query to fetch all SQL queries in this table:

1 2 | |

By setting the value of sqlsourceTableName and sqlsourceColumnQuerySource,sqlsourceColumnQueryName , SQLFLow-Submitter can fetch all SQL queries in this table and send it to the SQLFlow to analzye the lineage.

In this example,

1 2 3 | |

Please leave sqlsourceTableName empty if you don't fetch SQL queries from a specific table.

sqlsourceColumnQuerySource¶

In the above sample:

1 | |

sqlsourceColumnQueryName¶

1 | |

This parameter is optional, you don't need to specify a query name column if it doesn't exist in the table.